Building a Unified API Architecture for Reliability, Security, and Observability

Every modern SaaS product eventually needs to connect with multiple systems. These could include EHR and EMR platforms, CRMs, HR tools, accounting systems, or AI services. Each has its own API design, authentication rules, and data formats.

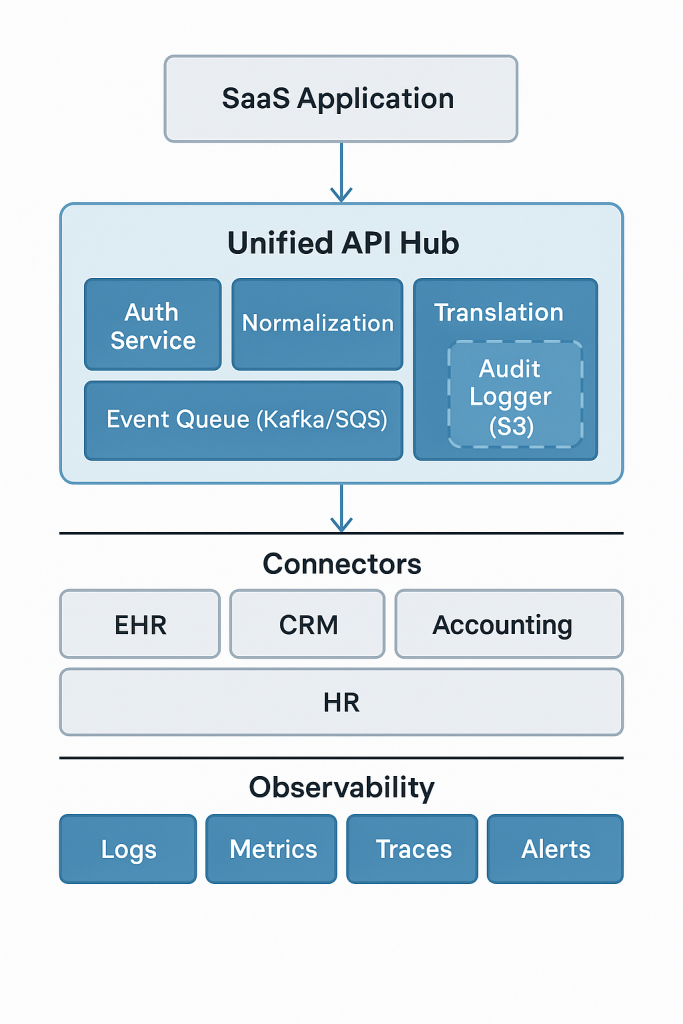

Without a unified approach, every integration adds new complexity. The solution is to build a unified API architecture that provides one reliable, secure, and observable layer for all integrations.

This guide walks through how to design that architecture from a system design point of view. It covers microservices, asynchronous and event-driven processing, real-time data streaming with Kafka, decoupling with SQS, and secure audit logging to S3.

For related topics, see:

- What Is a Unified API and How It Simplifies Integration Across Platforms

- How Data Normalization Keeps Your Unified API Consistent Across Platforms

- Designing a Centralized Unified API Hub for Scalable SaaS Platforms

Overview of the Unified API Architecture

A unified API provides one consistent way for your SaaS product to communicate with multiple third-party services.

```text
+----------------------------+
|      SaaS Application      |
+-------------+--------------+
              |
              v
     +--------+---------+
     |   Unified API    |
     +--------+---------+
              |
   +----------+-----------+
   |          |           |
+--v-----+ +--v------+ +--v---------+
|  CRM   | | EHR/EMR | | Accounting |
| Vendors| | Vendors | |  Vendors   |
+--------+ +---------+ +------------+
```

This architecture ensures that all integrations follow the same flow for authentication, logging, monitoring, and error handling.

1. Abstraction Layer

The abstraction layer hides the vendor-specific differences and provides a common interface for internal developers.

Responsibilities:

- Request routing

- Schema validation

- Input sanitization

- Unified response structure

```text
Client Request
      |
      v
[Abstraction Layer] -> [Vendor Connector]
```

This layer allows the system to evolve without breaking client integrations.

2. Data Normalization and Transformation

Since each vendor has a different data model, normalization ensures all outputs follow one schema.

Example mapping:

| Vendor | Raw Field | Normalized Field |
|---|---|---|
| Salesforce | FirstName | first_name |
| Epic | patient_name | first_name |
| QuickBooks | CustomerName | first_name |

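Normalization also standardizes value types. Dates become ISO 8601 strings, numbers use a consistent precision, and currency amounts share one representation.

Because every connector returns the same shape, analytics, tests, and AI workflows can consume data from any vendor without vendor-specific code.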
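The mapping table and type rules can be sketched as a small Python function. The `FirstName` and `CustomerName` mappings come from the table. The following are illustrative assumptions, not vendor documentation:

- the `CreatedDate` to `created_at` and `Balance` to `balance` mappings
- the default date format
- treating timezone-less dates as UTC

```python
from datetime import datetime, timezone
from decimal import ROUND_HALF_UP, Decimal
from typing import Any

# FirstName/CustomerName come from the table above; the date and amount
# fields and their targets are hypothetical additions for illustration.
FIELD_MAPS: dict[str, dict[str, str]] = {
    "salesforce": {"FirstName": "first_name", "CreatedDate": "created_at"},
    "quickbooks": {"CustomerName": "first_name", "Balance": "balance"},
}


def to_iso8601(value: str, fmt: str) -> str:
    # Parse a vendor date string and emit ISO 8601 in UTC.
    parsed = datetime.strptime(value, fmt)
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption: naive values are UTC
    return parsed.astimezone(timezone.utc).isoformat()


def to_money(value: Any) -> str:
    # Convert through str() so floats are rounded as written, not as binary approximations.
    return str(Decimal(str(value)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP))


def normalize(vendor: str, record: dict[str, Any],
              date_fmt: str = "%Y-%m-%d") -> dict[str, Any]:
    """Rename known fields, drop unknown ones, and standardize value types."""
    mapping = FIELD_MAPS[vendor]
    out = {mapping[key]: value for key, value in record.items() if key in mapping}
    if "created_at" in out:
        out["created_at"] = to_iso8601(out["created_at"], date_fmt)
    if "balance" in out:
        out["balance"] = to_money(out["balance"])
    return out
```

Returning amounts as decimal strings, rather than floats, keeps the exact value intact when the normalized record is serialized to JSON.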

See: How Data Normalization Keeps Your Unified API Consistent Across Platforms.

3. Single Endpoint and Gateway

A unified API exposes one public endpoint:

https://api.yourservice.com/v1/

Requests flow through an API Gateway that enforces rate limits, authentication, and routing.

Example tools: AWS API Gateway, Kong, or NGINX.

Client --> [API Gateway] --> [Unified API] --> [Connectors]

This design centralizes access control, monitoring, and versioning.
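Rate limiting is usually a gateway feature you configure rather than write. Still, a token bucket, one common algorithm for it, shows what the gateway enforces per client.

This is a single-process sketch. `TokenBucket`, `RateLimiter`, and their parameters are illustrative. A deployment with several gateway instances would keep bucket state in a shared store rather than a dict.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One bucket per client ID, as a gateway keys limits per API client."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.rate, self.capacity, self.clock)
        return self.buckets[client_id].allow()
```

Passing `clock` in makes the limiter testable with a fake clock. `time.monotonic` is the default because it never jumps backward when the system clock is adjusted.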

4. Simplified Authentication

Vendors use different authentication standards such as OAuth or API keys. The unified API manages all of these behind one consistent token system.

```text
Client
  |
  v
[Unified Auth Service]
  |
  +--> Vendor A (OAuth)
  +--> Vendor B (API Key)
  +--> Vendor C (JWT)
```

You can store credentials securely in AWS Secrets Manager or HashiCorp Vault and rotate them automatically.

This keeps your API secure and compliant without duplicating logic across connectors.
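The following sketch shows how one auth service can hide the three schemes in the diagram. `get_secret` stands in for a lookup against AWS Secrets Manager or Vault. The vendor names, the secret field names, and the default `X-API-Key` header are assumptions; each real vendor documents its own header and token format.

```python
from typing import Callable

# Hypothetical vendor -> auth scheme table, mirroring the diagram above.
VENDOR_AUTH = {"vendor_a": "oauth", "vendor_b": "api_key", "vendor_c": "jwt"}

# Stand-in for a secret-store lookup (e.g. a Secrets Manager or Vault client call).
SecretLookup = Callable[[str], dict[str, str]]


def vendor_auth_headers(vendor: str, get_secret: SecretLookup) -> dict[str, str]:
    """Build the outbound auth headers for one vendor from stored credentials."""
    scheme = VENDOR_AUTH[vendor]
    secret = get_secret(vendor)
    if scheme == "oauth":
        # OAuth 2.0 access tokens are typically sent as bearer tokens (RFC 6750).
        return {"Authorization": f"Bearer {secret['access_token']}"}
    if scheme == "api_key":
        # Header name differs per vendor; stored with the key, defaulting to X-API-Key.
        return {secret.get("header", "X-API-Key"): secret["api_key"]}
    if scheme == "jwt":
        # Many JWT-based APIs also use the bearer scheme; check the vendor's docs.
        return {"Authorization": f"Bearer {secret['jwt']}"}
    raise ValueError(f"unsupported auth scheme: {scheme}")
```

Connectors call `vendor_auth_headers` and never hold raw credentials in their own configuration. As long as lookups are not cached indefinitely, rotating a secret in the store takes effect without redeploying any connector.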

5. Translation Layer

The translation layer converts unified API requests into vendor-specific formats. It handles field mapping, pagination, and protocol differences.

Unified Request --> [Translation Layer] --> Vendor Request

Vendor Response --> [Translation Layer] --> Normalized Output

This layer lets legacy vendors that expose SOAP or XML APIs work with your unified API like any other vendor.
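The following sketches the response direction for an XML vendor, using Python's standard `xml.etree.ElementTree`. The XML shape, element names, and the `page`/`totalPages` attributes are hypothetical. Real SOAP responses wrap the payload in an envelope with XML namespaces, which `find` and `findall` handle through a namespace mapping argument.

```python
import xml.etree.ElementTree as ET
from typing import Any

# Hypothetical legacy vendor response; element and attribute names are assumptions.
SAMPLE_XML = """<CustomerList page="1" totalPages="3">
  <Customer><Id>42</Id><GivenName>Ada</GivenName></Customer>
  <Customer><Id>43</Id><GivenName>Alan</GivenName></Customer>
</CustomerList>"""


def translate_customer_list(xml_text: str) -> dict[str, Any]:
    """Convert the vendor's XML page into the unified JSON-style structure."""
    root = ET.fromstring(xml_text)
    items = [
        # Field mapping: vendor element names -> normalized field names.
        {"id": customer.findtext("Id"), "first_name": customer.findtext("GivenName")}
        for customer in root.findall("Customer")
    ]
    # Pagination translation: vendor page counters -> a single next_page cursor.
    page = int(root.get("page", "1"))
    total = int(root.get("totalPages", "1"))
    return {"data": items, "next_page": page + 1 if page < total else None}
```

The request direction is the mirror image: build the vendor's XML body from the unified request fields before sending it.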

6. Microservices Architecture

The unified API should be composed of multiple microservices instead of a single large system.

Example service split:

- gateway-service for routing

- auth-service for authentication

- normalization-service for schema mapping

- connector-service for each vendor

- event-service for async tasks

- audit-service for logs

```text
+--------------------------------------------------------+
|                    Unified API Hub                     |
+--------------------------------------------------------+
| Gateway | Auth | Normalization | Event | Audit | Queue |
+--------------------------------------------------------+
| Connector A     | Connector B     | Connector C     |
+--------------------------------------------------------+
```

Microservices make the system easier to scale and update independently.

7. Asynchronous and Event-Driven Design

Not all API calls should happen in real time. Some data exchanges take longer, and some should run in the background.

The unified API can hand long-running or bulk sync operations to Amazon SQS, Kafka, or RabbitMQ and let background workers process them.

Client Request --> Unified API --> Message Queue --> Worker Service --> Vendor API

- Kafka is used for real-time data streaming or event broadcasting to multiple consumers.

- SQS provides reliable, decoupled task handling for background processing.

This structure keeps response times predictable under load and prevents slow vendors from dragging down the overall API response time.
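The request/worker split can be sketched in-process, with Python's `queue.Queue` standing in for SQS, Kafka, or RabbitMQ. `JobQueue`, the 202-style response, and the job status values are illustrative assumptions. With a real broker, `submit` would publish a message and `drain` would run in a separate worker service.

```python
import queue
import uuid
from typing import Any, Callable


class JobQueue:
    """In-memory stand-in for a broker plus a job-status table."""

    def __init__(self) -> None:
        self.jobs: dict[str, dict[str, Any]] = {}
        self.pending: queue.Queue[str] = queue.Queue()

    def submit(self, payload: dict[str, Any]) -> dict[str, Any]:
        # Record the job and enqueue it; the client does not wait for the vendor.
        job_id = str(uuid.uuid4())
        self.jobs[job_id] = {"status": "queued", "payload": payload}
        self.pending.put(job_id)
        return {"status_code": 202, "job_id": job_id}

    def drain(self, call_vendor: Callable[[dict[str, Any]], Any]) -> int:
        """Worker loop: process queued jobs until the queue is empty."""
        processed = 0
        while True:
            try:
                job_id = self.pending.get_nowait()
            except queue.Empty:
                return processed
            job = self.jobs[job_id]
            try:
                job["result"] = call_vendor(job["payload"])
                job["status"] = "done"
            except Exception as exc:  # a failing vendor marks only this job as failed
                job["status"] = "failed"
                job["error"] = str(exc)
            processed += 1

    def status(self, job_id: str) -> str:
        return self.jobs[job_id]["status"]
```

The client would then poll a status endpoint (or receive a webhook) using the `job_id`, instead of holding a connection open while a slow vendor responds.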

8. Real-Time Data Exchange with Kafka

Kafka can stream live updates between systems. For example, when a customer is created in a CRM, the unified API can publish an event to a Kafka topic. Other microservices, such as analytics or notification systems, can consume these events as soon as they are published.

CRM Event --> Kafka Topic --> Unified API Consumer --> Normalization Service --> Database

This supports near real-time synchronization across systems without polling.

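Kafka preserves ordering within a partition, so keying events by a stable ID such as the customer ID keeps each customer's events in order. Consumer groups let you scale horizontally by adding consumers (up to the partition count), and committed offsets let a consumer resume where it left off after a restart.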
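The following sketches how the unified API might shape a CRM event before publishing it. Keying by customer ID is what keeps one customer's events ordered, because records with the same key go to the same partition.

The event name and envelope fields are hypothetical. `zlib.crc32` is only a stand-in to illustrate the idea: Kafka's default partitioner hashes keys with murmur2, so in practice the client library, not this function, picks the partition.

```python
import json
import zlib
from datetime import datetime, timezone
from typing import Any


def choose_partition(key: str, num_partitions: int) -> int:
    # Stable hash: the same key always maps to the same partition.
    # Illustration only; Kafka's default partitioner uses murmur2, not crc32.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def customer_created_event(customer_id: str, vendor: str,
                           data: dict[str, Any]) -> tuple[bytes, bytes]:
    """Return (key, value) bytes for a hypothetical 'customer.created' event."""
    envelope = {
        "type": "customer.created",
        "customer_id": customer_id,
        "source": vendor,
        "occurred_at": datetime.now(timezone.utc).isoformat(),
        "data": data,
    }
    return customer_id.encode("utf-8"), json.dumps(envelope).encode("utf-8")
```

With a client such as kafka-python, the pair would be published with `producer.send("crm.customers", key=key, value=value)`, where `crm.customers` is a hypothetical topic name.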

9. Decoupling and Queues with SQS

SQS is ideal for asynchronous processing where you want reliable decoupling between components.

For example, when an external API call fails, you can push it to a retry queue:

API Hub --> SQS (Retry Queue) --> Worker (Retry Service)

This prevents the main API thread from blocking and improves reliability under vendor downtime.

You can also use DLQs (Dead Letter Queues) to capture persistent failures and analyze them later.
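The retry-then-dead-letter flow can be simulated in memory to show the logic. In SQS itself:

- a message leaves the queue only when the consumer deletes it after success
- a redrive policy moves a message to the DLQ once its receive count exceeds `maxReceiveCount`

`RetryQueue` is a hypothetical stand-in that approximates this behavior. It is not a client for the SQS API.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Message:
    body: dict[str, Any]
    receive_count: int = 0


class RetryQueue:
    """In-memory approximation of an SQS queue with a redrive policy to a DLQ."""

    def __init__(self, max_receive_count: int = 3) -> None:
        self.max_receive_count = max_receive_count
        self.messages: deque[Message] = deque()
        self.dead_letters: list[Message] = []

    def send(self, body: dict[str, Any]) -> None:
        self.messages.append(Message(body))

    def poll(self, handler: Callable[[dict[str, Any]], Any]) -> None:
        """Deliver each currently queued message once."""
        for _ in range(len(self.messages)):
            msg = self.messages.popleft()
            msg.receive_count += 1
            try:
                handler(msg.body)  # success: the message is dropped ("deleted")
            except Exception:
                if msg.receive_count >= self.max_receive_count:
                    self.dead_letters.append(msg)  # give up; keep for analysis
                else:
                    self.messages.append(msg)  # becomes available again for a retry
```

Adding a delay before each retry gives a struggling vendor time to recover instead of being hit again immediately. In SQS, this can be done through the visibility timeout or a per-message delay.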

10. Audit Logging to S3

For compliance and traceability, the unified API should keep an immutable record of all critical operations.

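Instead of storing audit logs in the main database, write them to Amazon S3 as structured JSON files. S3 objects can still be overwritten or deleted by anyone with the right permissions, so enable S3 Object Lock on the audit bucket when the records must be write-once.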

Example entry:

```json
{
  "event_id": "a12345",
  "timestamp": "2025-11-10T20:32:00Z",
  "user": "api_client_23",
  "action": "CREATE_PATIENT",
  "vendor": "Epic",
  "status": "success"
}
```

S3 provides durable, low-cost storage, and Amazon Athena can query the JSON logs in place with SQL during audits.

11. Observability and Monitoring

Each request should be traced end-to-end across microservices.

You can use:

- Prometheus and Grafana for metrics

- ELK Stack or CloudWatch Logs for centralized logging

- OpenTelemetry for distributed tracing

```text
+-------------------------------------+
| Observability and Monitoring Layer  |
+-------------------------------------+
| Metrics | Logs | Traces | Alerts    |
+-------------------------------------+
```

Include a correlation ID in every log entry to trace a request through Kafka, SQS, and connectors.
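The following sketches correlation-ID propagation with Python's standard `contextvars` and `logging` modules. The helper names are hypothetical. The `X-Correlation-ID` header name is a common convention rather than a standard; OpenTelemetry propagates the W3C `traceparent` header instead. The same value would also be copied into Kafka record headers and SQS message attributes, so that workers can log it too.

```python
import contextvars
import logging
import uuid

# Current request's ID; values set in one thread or asyncio task do not leak into others.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Attach the current correlation ID to every record this handler emits."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def start_request(incoming_headers: dict[str, str]) -> str:
    # Reuse the caller's ID so the trace continues; otherwise mint a new one.
    cid = incoming_headers.get("X-Correlation-ID") or str(uuid.uuid4())
    correlation_id.set(cid)
    return cid


def outgoing_headers() -> dict[str, str]:
    # Forward the ID on vendor calls and as message headers/attributes.
    return {"X-Correlation-ID": correlation_id.get()}


handler = logging.StreamHandler()
handler.addFilter(CorrelationIdFilter())
handler.setFormatter(logging.Formatter("%(levelname)s [%(correlation_id)s] %(message)s"))
logger = logging.getLogger("unified_api")
logger.addHandler(handler)
```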

12. Reliability

Reliability ensures that the unified API keeps functioning even if some vendors fail.

Techniques include:

- Caching responses with Redis

- Retrying failed requests with backoff

- Circuit breakers to prevent cascading failures

- Graceful degradation when vendor data is missing

This gives your clients predictable responses even under unstable conditions.
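The following is a minimal, single-threaded sketch of two of the techniques above:

- retries with exponential backoff and full jitter
- a circuit breaker that opens after consecutive failures

Thresholds, delays, and names are illustrative assumptions. A retry library such as tenacity, or a service mesh, usually provides hardened versions of both.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(fn: Callable[[], T], attempts: int = 4, base: float = 0.2,
                       cap: float = 5.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn up to `attempts` times, sleeping a random 0..min(cap, base*2^n) between tries."""
    for n in range(attempts - 1):
        try:
            return fn()
        except Exception:
            sleep(random.uniform(0, min(cap, base * 2 ** n)))  # full jitter
    return fn()  # final attempt: any error propagates to the caller


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a vendor while its circuit is open."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; after `reset_after`
    seconds, lets a trial call through (half-open) and closes on success."""

    def __init__(self, threshold: int = 5, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: float | None = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise CircuitOpenError("circuit open: skipping vendor call")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures in a row, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result
```

To combine them, the breaker wraps the retried call, for example `breaker.call(lambda: retry_with_backoff(fetch))`, where `fetch` is a hypothetical connector call. During an outage, the breaker trips and later requests fail fast (or fall back to a cached response) instead of waiting through retries.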

13. Security

A strong security model protects user data and credentials.

Key practices:

- TLS on all connections

- Role-based access control

- Input validation at the gateway

- Token rotation and encryption

- Audit trails for all privileged actions

With centralized auth and logging, every request can be verified, logged, and reviewed.
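The following sketches role-based access control combined with an audit trail for privileged actions. The roles, the permission strings, and the choice of which actions count as privileged are hypothetical. In practice, roles would come from verified token claims at the gateway, and the audit list would be the audit logger.

```python
from enum import Enum
from typing import Any, Iterable


class Permission(str, Enum):
    READ_PATIENT = "patient:read"
    WRITE_PATIENT = "patient:write"
    MANAGE_CREDENTIALS = "credentials:manage"


# Hypothetical roles; real ones come from your product's access model.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "viewer": frozenset({Permission.READ_PATIENT}),
    "clinician": frozenset({Permission.READ_PATIENT, Permission.WRITE_PATIENT}),
    "admin": frozenset(Permission),  # every permission
}

# Actions that always leave an audit entry, allowed or not (assumption).
PRIVILEGED = frozenset({Permission.MANAGE_CREDENTIALS})


def is_allowed(roles: Iterable[str], permission: Permission) -> bool:
    # Deny by default: unknown roles grant nothing.
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)


def authorize(client_id: str, roles: Iterable[str], permission: Permission,
              audit_log: list[dict[str, Any]]) -> bool:
    """Check access and record privileged decisions in the audit trail."""
    allowed = is_allowed(roles, permission)
    if permission in PRIVILEGED:
        audit_log.append({
            "user": client_id,
            "action": permission.value,
            "status": "allowed" if allowed else "denied",
        })
    return allowed
```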

14. Scalability

Each component scales independently. For example:

- API Gateway scales with client requests

- Worker services scale based on queue size

- Kafka consumers scale up to the number of partitions in a topic, since each partition is read by at most one consumer in a group

- Normalization and translation services scale by CPU load

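Deploy these components on Kubernetes, where the Horizontal Pod Autoscaler (or an event-driven autoscaler such as KEDA for queue-length and consumer-lag metrics) can scale each service automatically. Docker Swarm also runs replicated services and restarts failed tasks, but it has no built-in autoscaler, so scaling there is manual or script-driven.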
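For queue-driven workers, one common heuristic is to size the pool from the backlog. Divide the number of queued messages by how many messages one worker can clear within an acceptable drain time. The function and parameter names are illustrative; an autoscaler such as KEDA applies a similar queue-length target for you.

```python
import math


def desired_workers(backlog: int, msgs_per_worker_per_sec: float,
                    target_drain_seconds: float,
                    min_workers: int = 1, max_workers: int = 50) -> int:
    """Workers needed to clear `backlog` within the target time, clamped to limits."""
    if msgs_per_worker_per_sec <= 0 or target_drain_seconds <= 0:
        raise ValueError("throughput and drain target must be positive")
    # Acceptable backlog per worker = per-worker throughput x target drain time.
    per_worker = msgs_per_worker_per_sec * target_drain_seconds
    needed = math.ceil(backlog / per_worker)
    return max(min_workers, min(max_workers, needed))
```

For Kafka consumers, pass the topic's partition count as `max_workers`, because consumers beyond that number sit idle in the group.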

15. Long-Term Benefits

This architecture delivers lasting advantages:

- Faster integration with new systems

- Lower cost of maintenance

- High availability during vendor outages

- Easier compliance with audit and data laws

- Real-time data exchange through Kafka

- Decoupled and reliable background processing with SQS

- Durable, traceable audit logs in S3

It is flexible enough to support future use cases such as AI data pipelines, real-time dashboards, and automated workflows.

Example Unified API Architecture

```text
+---------------------------------------------------------------+
|                        Unified API Hub                        |
|---------------------------------------------------------------|
| API Gateway | Auth Service | Normalization | Translation      |
|---------------------------------------------------------------|
| Event Queue (Kafka/SQS) | Worker Services | Audit Logger (S3) |
|---------------------------------------------------------------|
| Connectors: EHR | CRM | Accounting | HR | AI                  |
|---------------------------------------------------------------|
| Observability: Logs | Metrics | Traces | Alerts               |
+---------------------------------------------------------------+
```

Summary

A unified API architecture creates a secure, reliable, and observable foundation for modern SaaS integrations.

It simplifies vendor connections, centralizes security, and introduces asynchronous and event-driven capabilities with Kafka and SQS.

With S3 for audit logs and microservices for modular scaling, this design provides long-term flexibility and resilience.

For more related reading:

- What Is a Unified API and How It Simplifies Integration Across Platforms

- How Data Normalization Keeps Your Unified API Consistent Across Platforms

- Designing a Centralized Unified API Hub for Scalable SaaS Platforms

A well-built unified API is not just a technical layer. It is the core foundation that keeps your SaaS platform scalable, secure, and ready for real-time data exchange.